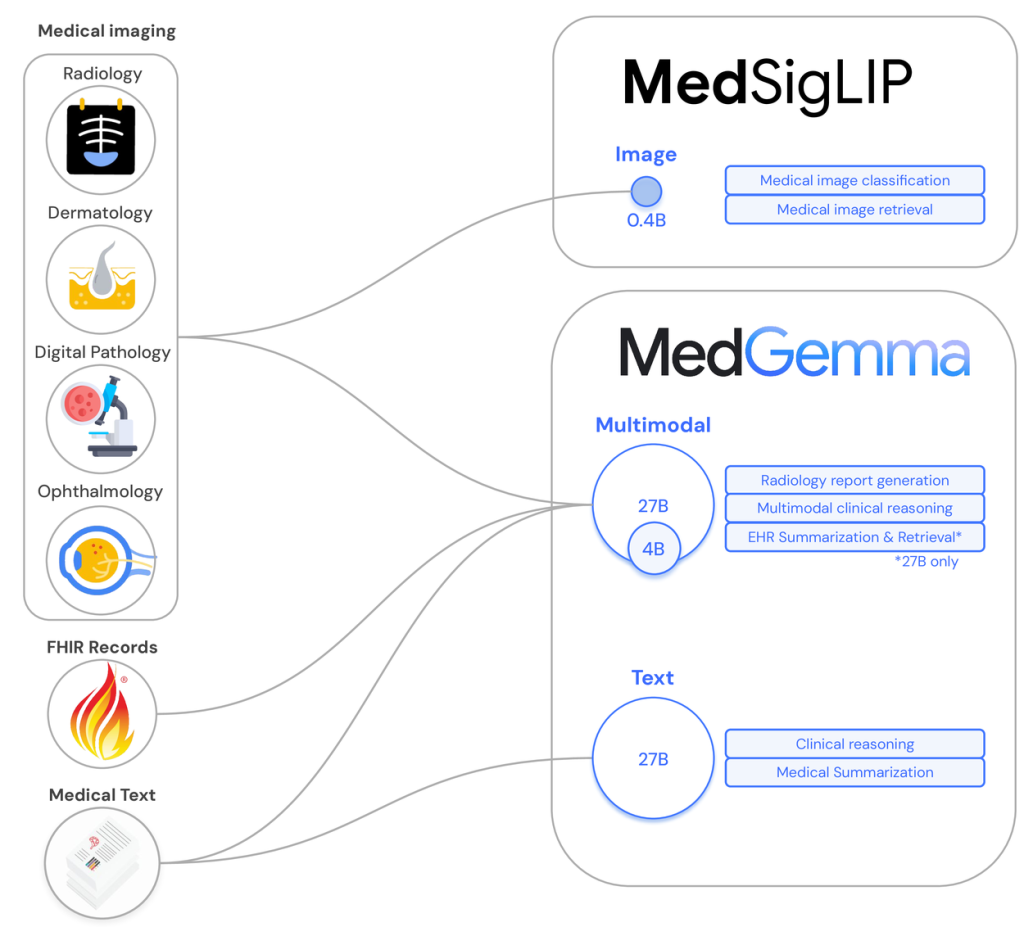

As first reported by AI News, Google has introduced a suite of open-source AI models designed specifically for healthcare: MedGemma 27B, MedGemma 4B, and MedSigLIP. These models go beyond reading medical text; they can also interpret medical images such as chest X-rays, pathology slides, and dermatology photos alongside longitudinal patient records. The flagship MedGemma 27B model scored 87.7% on the MedQA benchmark while remaining far cheaper to run than larger, general-purpose models. That result marks a significant step toward real-world clinical AI support, especially for under-resourced health systems.

Source: research.google.

Its smaller sibling, MedGemma 4B, is optimized for efficiency but still delivers meaningful results, scoring 64.4% on the same benchmark. In radiologist review, 81% of the chest X-ray reports it generated were judged accurate enough to assist in patient care. Meanwhile, MedSigLIP, a lightweight image-and-text encoder trained specifically on medical images, offers capabilities that general AI models lack, such as identifying medically significant patterns and enabling case-based image retrieval through its joint understanding of images and text.

Real-world adoption signals practical value

Several healthcare institutions around the world are already piloting these models. DeepHealth in the U.S. reports that MedSigLIP helps spot issues radiologists might overlook, while Chang Gung Memorial Hospital in Taiwan reports that MedGemma works well with medical texts written in Traditional Chinese. In India, Tap Health has highlighted what it sees as MedGemma's notable strength: it is less prone to the factual hallucinations common in general-purpose AI and stays clinically relevant and accurate when context matters most. This reliability could prove vital as healthcare workflows increasingly integrate AI.

Google's decision to open-source these models is key to their potential. Hospitals can deploy the models locally, so patient data does not have to leave their premises, and researchers and developers can fine-tune them for region-specific medical challenges. All three models can run on a single GPU, and the smaller MedGemma 4B and MedSigLIP can be adapted for mobile hardware, widening access to high-quality AI tools in low-resource settings.

Google's release of the MedGemma and MedSigLIP models signals a major advance in accessible, medically literate AI tools that fit both clinical standards and local data requirements. These open-source models won't replace doctors, but they offer capable, affordable support that could extend quality care to the regions and providers that need it most. As health systems worldwide face rising demand and strained resources, tools like these could amplify human expertise.